Elasticsearch

Run Elasticsearch in Docker with its HTTP API on port 9200:

docker run -itd -p 9200:9200 -e PLUGINS="appbaseio/dejavu" itzg/elasticsearch

If the container stops right after starting, check the current value of `vm.max_map_count`:

$ cat /proc/sys/vm/max_map_count
65530

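Raise it to 262144, the minimum Elasticsearch requires (this needs root, and the change is lost on reboot unless `vm.max_map_count=262144` is also added to `/etc/sysctl.conf`):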

$ sudo sysctl -w vm.max_map_count=262144
vm.max_map_count = 262144

Start the container again (`42d6` is the ID prefix of the stopped container; find yours with `docker ps -a`):

$ docker start 42d6
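The check-and-raise steps above can be scripted. This is a minimal sketch, not part of the original setup: `max_map_count_ok` is a hypothetical helper that reads the current limit (from `/proc/sys/vm/max_map_count` by default) and succeeds only if it is at least 262144. The path and threshold are parameters so it can be tried against a test file.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original notes).
# Returns 0 if vm.max_map_count >= min, 1 if it is too low,
# and 2 if the value cannot be read or is not a plain integer.
max_map_count_ok() {
  local file="${1:-/proc/sys/vm/max_map_count}"
  local min="${2:-262144}"
  local current=""
  [[ -r "$file" ]] || return 2
  # Accept a file with or without a trailing newline
  read -r current < "$file" || [[ -n "$current" ]] || return 2
  [[ "$current" =~ ^[0-9]+$ ]] || return 2
  # Arithmetic test: exit status 0 only when the limit meets the minimum
  (( current >= min ))
}
```

For example, `max_map_count_ok || sudo sysctl -w vm.max_map_count=262144` raises the limit only when needed. A return code of 2 means the value could not be read (for example, on a non-Linux host), in which case raising it blindly is not a good idea.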

# Basic configuration for our connector

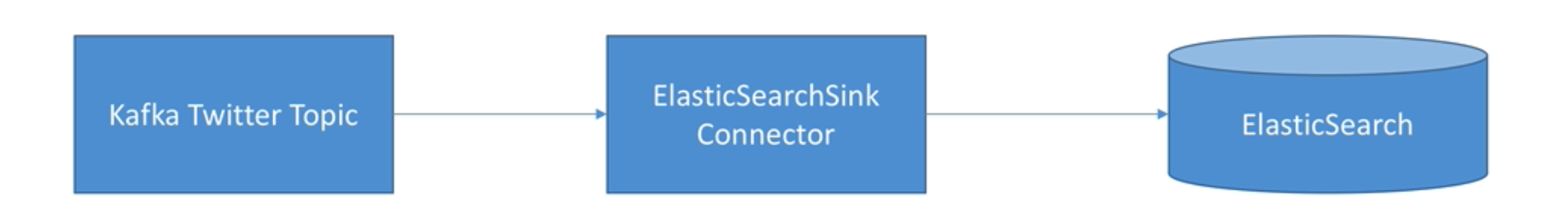

name=sink-elastic-twitter-distributed

connector.class=io.confluent.connect.elasticsearch.ElasticsearchSinkConnector

# This sink can run in parallel, so we allow up to two tasks

tasks.max=2

topics=elasticsearch-topic

# The input topic's records carry a schema, so schema conversion is enabled here too

key.converter=org.apache.kafka.connect.json.JsonConverter

key.converter.schemas.enable=true

value.converter=org.apache.kafka.connect.json.JsonConverter

value.converter.schemas.enable=true

# Elasticsearch connector-specific configuration

# http://docs.confluent.io/3.3.0/connect/connect-elasticsearch/docs/configuration_options.html

connection.url=http://172.17.0.3:9200

type.name=kafka-connect

# The record keys in this topic are null, so key.ignore=true makes the connector
# use topic+partition+offset as the document ID instead

key.ignore=true
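In distributed mode, a connector is normally submitted to a Connect worker's REST API as JSON of the form `{"name": ..., "config": {...}}` rather than as a properties file. The helper below is a hypothetical sketch that turns a simple `key=value` properties file like the one above into that request body. It assumes no spaces around `=`, no `:` separators, and no multi-line values.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a simple Kafka Connect .properties file into
# the {"name": ..., "config": {...}} JSON body used with POST /connectors.
props_to_connector_json() {
  local file="$1" name="" entries="" line key value
  local comment_re='^[[:space:]]*[#!]'
  # The extra test handles a final line with no trailing newline
  while IFS= read -r line || [[ -n "$line" ]]; do
    # Skip blank lines and comment lines (# or !, as in .properties files)
    [[ -z "${line//[[:space:]]/}" ]] && continue
    [[ "$line" =~ $comment_re ]] && continue
    # Split on the first '=' only, so values may themselves contain '='
    key="${line%%=*}"
    value="${line#*=}"
    # Escape backslashes first, then double quotes, so the JSON stays valid
    value=$(printf '%s' "$value" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')
    # "name" goes at the top level of the request body, not inside "config"
    if [[ "$key" == "name" ]]; then
      name="$value"
      continue
    fi
    entries+="${entries:+,}\"$key\":\"$value\""
  done < "$file"
  printf '{"name":"%s","config":{%s}}\n' "$name" "$entries"
}
```

Assuming the sink settings are saved as `elasticsearch-sink.properties` (hypothetical filename) and the worker listens on the default REST port 8083, the connector could then be created with `curl -X POST -H "Content-Type: application/json" --data "$(props_to_connector_json elasticsearch-sink.properties)" http://localhost:8083/connectors`.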

# FileStream source connector: reads lines from es.txt into elasticsearch-topic
# These are standard Kafka Connect parameters, needed for ALL connectors

name=filestream-for-es

connector.class=org.apache.kafka.connect.file.FileStreamSourceConnector

tasks.max=1

# Parameters can be found here: https://github.com/apache/kafka/blob/trunk/connect/file/src/main/java/org/apache/kafka/connect/file/FileStreamSourceConnector.java

file=es.txt

topic=elasticsearch-topic

# Added configuration for distributed mode:

key.converter=org.apache.kafka.connect.json.JsonConverter

key.converter.schemas.enable=true

value.converter=org.apache.kafka.connect.json.JsonConverter

value.converter.schemas.enable=true
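With `value.converter.schemas.enable=true`, `JsonConverter` wraps each value in a `schema`/`payload` envelope. The FileStream source emits every line of `es.txt` as a string value, so records on `elasticsearch-topic` should look roughly like the output of this hypothetical helper. It only illustrates the envelope shape (it is not code the connector runs) and escapes just backslashes and double quotes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the envelope JsonConverter is expected to produce
# for a string value when schemas.enable=true (FileStream lines are strings).
string_record_envelope() {
  local escaped
  # Escape backslashes first, then double quotes, so the payload is valid JSON
  escaped=$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')
  printf '{"schema":{"type":"string","optional":false},"payload":"%s"}\n' "$escaped"
}
```

For example, `string_record_envelope 'hello'` prints `{"schema":{"type":"string","optional":false},"payload":"hello"}`. Setting `schemas.enable=false` would instead put only the bare payload on the topic.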

Run the Dejavu web UI for browsing the Elasticsearch data:

docker run -p 1358:1358 -d appbaseio/dejavu

open http://localhost:1358/

Download the Elasticsearch sink connector from Confluent Hub:

https://www.confluent.io/hub/confluentinc/kafka-connect-elasticsearch

Upload the downloaded archive (version 11.1.10 here) to S3:

aws s3 cp ~/Downloads/confluentinc-kafka-connect-elasticsearch-11.1.10.zip s3://broadcast-videos/